Introduction

Live video streaming relies on efficient protocols to deliver content with minimal delay. While RTMP has been the industry standard for years, the SRT protocol is gaining traction for its low-latency capabilities. But what makes SRT different? When should you use it instead of RTMP? This article breaks down the key differences, advantages, and real-world performance of both protocols.

What is the SRT Protocol?

According to [Wikipedia](https://en.wikipedia.org/wiki/Secure_Reliable_Transport), SRT (Secure Reliable Transport) is an open-source video transport protocol that uses UDP (User Datagram Protocol) for data transmission. It was developed to solve challenges like packet loss and high latency in unstable networks.

Key Features of SRT:

✅ Low Latency (1-2 seconds end-to-end) – Ideal for live broadcasts; the transport’s own latency buffer is configurable (120 ms by default in libsrt).

✅ Error Recovery – Automatic retransmission of lost packets.

✅ Encryption – Secure streaming with AES-128, AES-192, or AES-256.

✅ Firewall-Friendly – Caller, listener, and rendezvous connection modes help it work across firewalls and NAT.
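To show how these features typically surface in practice, here is a minimal Python sketch that builds an `srt://` URL for FFmpeg’s SRT protocol handler. It assumes an FFmpeg build with libsrt; the option names `mode`, `latency` (which FFmpeg takes in microseconds), `passphrase`, and `pbkeylen` come from FFmpeg’s protocol documentation, while the helper `build_srt_url` and the example host and port are hypothetical.

```python
from urllib.parse import urlencode

# Values FFmpeg's srt protocol accepts for these options.
VALID_MODES = {"caller", "listener", "rendezvous"}
VALID_KEY_LENGTHS = {16, 24, 32}  # bytes: AES-128, AES-192, AES-256


def build_srt_url(host, port, mode="caller", latency_ms=120,
                  passphrase=None, key_len=16):
    """Hypothetical helper: build an srt:// URL for an FFmpeg build with libsrt."""
    if mode not in VALID_MODES:
        raise ValueError(f"unsupported SRT mode: {mode}")
    # FFmpeg expects the SRT latency option in microseconds, not milliseconds.
    params = {"mode": mode, "latency": latency_ms * 1000}
    if passphrase is not None:
        # SRT passphrases must be between 10 and 79 characters long.
        if not 10 <= len(passphrase) <= 79:
            raise ValueError("passphrase must be 10 to 79 characters long")
        if key_len not in VALID_KEY_LENGTHS:
            raise ValueError("key_len must be 16, 24 or 32 bytes")
        params["passphrase"] = passphrase
        params["pbkeylen"] = key_len  # a non-zero key length enables sender encryption
    return f"srt://{host}:{port}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_srt_url("203.0.113.10", 9000, latency_ms=200,
                        passphrase="example-pass-123", key_len=32))
```

For example, the call above returns `srt://203.0.113.10:9000?mode=caller&latency=200000&passphrase=example-pass-123&pbkeylen=32`. The 120 ms default mirrors libsrt’s default latency; in a real deployment you would tune it to the network path.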

SRT vs. RTMP: Key Differences

While both protocols are used for live streaming, they have fundamental differences in performance and use cases.

| Feature | SRT Protocol | RTMP Protocol |
|---|---|---|
| Transport | UDP-based, with retransmission of lost packets | TCP-based (guaranteed, in-order delivery) |
| Typical latency | 1-2 seconds | 2-5 seconds |
| Use case | Long-distance, unstable networks | CDN-based streaming |
| Security | Built-in AES encryption | Requires RTMPS (RTMP over TLS) |

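Latency Comparison - SRT achieves lower latency because it runs over UDP and manages recovery itself: lost packets are retransmitted only while they can still arrive within the configured latency window, and packets that would arrive too late are dropped. RTMP, while more stable, inherits TCP’s guaranteed in-order delivery, so a single lost packet holds back every packet behind it until it is retransmitted (head-of-line blocking), and TCP’s congestion control slows the sender after losses.

Reliability & Error Handling - SRT continuously measures round-trip time and requests retransmission of missing packets, making it better suited to unstable connections (e.g., satellite or mobile networks). RTMP is more predictable but struggles with packet loss in poor networks.

Compatibility - RTMP is widely supported by platforms like YouTube Live, Facebook Live, and Twitch. SRT is growing in adoption but requires encoder/decoder support.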
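The latency trade-off between the two transports can be illustrated with a deliberately simplified Python model. This is not an implementation of either protocol. It makes four assumptions: packets are sent at a fixed interval, a lost packet is recovered exactly one extra round trip later, TCP congestion control and retransmission timeouts are ignored, and the function names are hypothetical. The TCP-like receiver must deliver in order, so packets queued behind a lost one wait for it (head-of-line blocking). The SRT-like receiver releases every packet a fixed `latency_ms` after it was sent and drops any packet that cannot be recovered in time.

```python
def tcp_like_delays(n, interval_ms, one_way_ms, lost):
    """Per-packet delay (ms) for an in-order receiver that never drops packets."""
    rtt = 2 * one_way_ms
    delays, last_delivery = [], 0
    for i in range(n):
        sent = i * interval_ms
        # Simplification: a lost packet arrives one extra round trip later.
        arrival = sent + one_way_ms + (rtt if i in lost else 0)
        # In-order delivery: a packet cannot be handed over before its predecessor.
        delivery = max(arrival, last_delivery)
        last_delivery = delivery
        delays.append(delivery - sent)
    return delays


def srt_like_delays(n, one_way_ms, lost, latency_ms):
    """Per-packet delay (ms) for a fixed-latency receiver; None marks a dropped packet."""
    rtt = 2 * one_way_ms
    delays = []
    for i in range(n):
        arrival_delay = one_way_ms + (rtt if i in lost else 0)
        # Every packet is released latency_ms after sending; late ones are dropped.
        delays.append(latency_ms if arrival_delay <= latency_ms else None)
    return delays


if __name__ == "__main__":
    print(tcp_like_delays(5, 10, 50, {1}))   # [50, 150, 140, 130, 120]
    print(srt_like_delays(5, 50, {1}, 120))  # [120, None, 120, 120, 120]
    print(srt_like_delays(5, 50, {1}, 200))  # [200, 200, 200, 200, 200]
```

In this toy run, one loss delays four TCP-like packets. The SRT-like receiver keeps a constant delay: with a 120 ms buffer it gives up packet 1, and with 200 ms it has time to recover it. SRT’s latency is therefore a configured trade-off between delay and loss tolerance, not a free improvement.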

Why is SRT Suddenly Popular?

Rise of Remote Production

Broadcasters need stable, low-latency streaming from field locations.

Demand for Sub-Second Streaming

Applications like gaming, auctions, and live sports require near real-time delivery.

Better Handling of Unstable Networks

SRT’s error correction outperforms RTMP in high-packet-loss scenarios.
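SRT can only recover a lost packet if its latency buffer leaves room for the retransmission, and each retransmission costs roughly one round trip. One way to reason about this is to size the buffer as a multiple of the measured round-trip time (RTT). The Python sketch below assumes a multiplier of 4 and illustrative RTT values. Neither comes from this article, and the helper names are hypothetical, so replace them with figures from your own network tests.

```python
import math


def srt_latency_ms(rtt_ms, multiplier=4.0):
    """Hypothetical sizing rule: latency buffer as a multiple of the round-trip time."""
    if rtt_ms <= 0 or multiplier <= 0:
        raise ValueError("rtt_ms and multiplier must be positive")
    return math.ceil(rtt_ms * multiplier)


def retransmission_budget(latency_ms, rtt_ms):
    """Approximate number of loss-and-retransmit round trips that fit in the buffer,
    after spending half an RTT on the original one-way trip (simplified model)."""
    remaining = latency_ms - rtt_ms / 2
    return max(0, math.floor(remaining / rtt_ms))


if __name__ == "__main__":
    for rtt in (20, 80, 250):  # illustrative RTTs, not measurements
        latency = srt_latency_ms(rtt)
        print(f"RTT {rtt} ms -> latency {latency} ms, "
              f"~{retransmission_budget(latency, rtt)} retransmission round trips")
```

The design choice here is that the buffer scales with distance: a long or congested path needs proportionally more latency to keep the same number of recovery attempts. That is why SRT’s advantage on lossy links comes with a latency cost that grows with RTT.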

Does SRT Always Reduce Latency? (Real-World Testing)

Some clients report no significant latency difference between SRT and RTMP. Why?

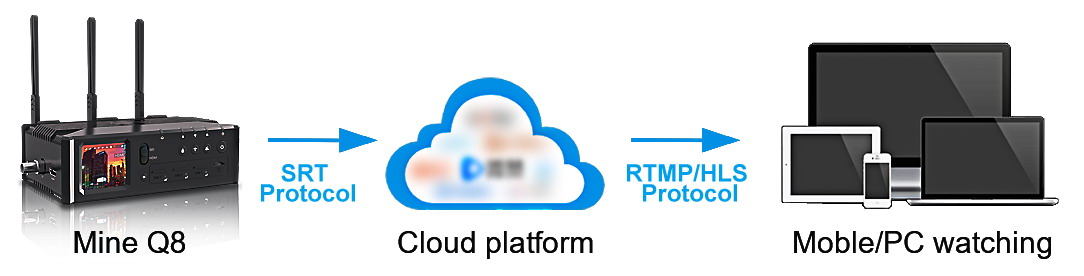

Case Study: Mine-Q8 Streaming Test

Streaming Setup:

- Camera → SRT Push → Cloud Platform → RTMP/HLS Transcoding → Mobile Users.

Result:

- The final latency depended on the cloud’s output protocol (RTMP/HLS), not SRT.

Key Takeaway:

- SRT’s low latency only applies in end-to-end SRT workflows.
- If the cloud converts SRT to RTMP/HLS, the delay remains similar to traditional streaming.
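The takeaway can be checked with simple arithmetic: glass-to-glass latency is roughly the sum of every stage, so the slowest stage dominates. In the Python sketch below, every millisecond figure is a hypothetical placeholder, not a measurement from the Mine-Q8 test. The HLS figure assumes 6-second segments and a player that starts about three segments behind the live edge.

```python
# All figures are hypothetical placeholders in milliseconds, not measured data.
HLS_SEGMENT_MS = 6_000      # assumed HLS segment duration
HLS_BUFFERED_SEGMENTS = 3   # assumed: player starts ~3 segments behind the live edge
HLS_DELIVERY_MS = HLS_SEGMENT_MS * HLS_BUFFERED_SEGMENTS


def glass_to_glass_ms(stages):
    """Approximate end-to-end latency as the sum of per-stage latencies."""
    return sum(ms for _, ms in stages)


workflows = {
    "SRT push -> cloud -> HLS": [("encode", 300), ("SRT ingest", 200),
                                 ("transcode", 1_000), ("HLS delivery", HLS_DELIVERY_MS)],
    "RTMP push -> cloud -> HLS": [("encode", 300), ("RTMP ingest", 1_000),
                                  ("transcode", 1_000), ("HLS delivery", HLS_DELIVERY_MS)],
    "SRT end to end": [("encode", 300), ("SRT transport", 200), ("decode", 100)],
}

if __name__ == "__main__":
    for name, stages in workflows.items():
        slowest = max(stages, key=lambda stage: stage[1])
        print(f"{name}: {glass_to_glass_ms(stages)} ms (dominated by {slowest[0]})")
```

With these placeholder numbers, swapping RTMP ingest for SRT ingest changes the total from 20,300 ms to 19,500 ms, because HLS delivery dominates both. Only the end-to-end SRT path drops the total to 600 ms.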

When Should You Use SRT?

✔ Point-to-Point Streaming (Encoder → SRT → Decoder)

✔ Unstable Networks (Wi-Fi, 4G/5G, satellite)

✔ High-Security Requirements (AES encryption)

When Should You Stick with RTMP?

✔ Broadcasting to CDNs (YouTube, Facebook, Twitch)

✔ Legacy Workflows (Existing RTMP encoders)

✔ When Absolute Lowest Latency Isn’t Critical
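The two checklists above can be condensed into a small decision helper. This is a hypothetical Python sketch that encodes only the criteria listed in this article. The class and function names are illustrative. The priority order is an assumption: CDN targets and legacy RTMP encoders win over the SRT criteria. Real deployments will weigh other factors too, such as which ingest protocols a given platform accepts.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    target_is_cdn: bool        # pushing to YouTube, Facebook, Twitch, or similar
    end_to_end_srt: bool       # every hop up to the decoder can use SRT
    unstable_network: bool     # Wi-Fi, 4G/5G, satellite
    needs_encryption: bool     # AES encryption required
    legacy_rtmp_encoder: bool  # existing RTMP-only encoders


def choose_protocol(w: Workflow) -> str:
    """Hypothetical helper mapping the article's checklists to a protocol choice."""
    # Assumed priority: CDN delivery and legacy RTMP encoders point to RTMP.
    if w.target_is_cdn or w.legacy_rtmp_encoder:
        return "RTMP"
    # Point-to-point, unstable-network, or high-security workflows point to SRT.
    if w.end_to_end_srt or w.unstable_network or w.needs_encryption:
        return "SRT"
    # Otherwise the lowest latency isn't critical, so RTMP's compatibility wins.
    return "RTMP"


if __name__ == "__main__":
    field_contribution = Workflow(target_is_cdn=False, end_to_end_srt=True,
                                  unstable_network=True, needs_encryption=True,
                                  legacy_rtmp_encoder=False)
    print(choose_protocol(field_contribution))  # SRT
```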

Conclusion

SRT is the future for low-latency, secure, and resilient streaming, especially in remote production.

RTMP remains the best choice for platform compatibility and CDN-based delivery.

For true low latency, ensure your workflow uses SRT end-to-end without RTMP/HLS conversion.